Seemingly overnight, our social media feeds have filled up with the output of artificial intelligence tools: AI-generated avatars, AI-generated poems, and screenshots of chat conversations with long-dead historical figures. In a relatively short span of time, artificial intelligence has moved from on-the-fringe, hard-to-access, haven’t-heard-of-it technology to easy-to-access, everyday-user-friendly, it’s-in-the-news tools.

Much of the reaction to this is excitement and curiosity. Anne-Laure Le Cunff calls these “artificial creativity” tools because they unlock our ability to create new content more quickly, with the computer’s assistance. Within the education sector, though, there has been particular alarm about the ability of artificial intelligence tools like OpenAI’s ChatGPT to help students cheat at school, even though tools that can write essays have been available since at least 2021.

Several researchers and tech pundits have argued that we should consider tools like ChatGPT a calculator for writing. Imagine if we had tried to permanently ban calculators in schools. Much like a calculator is a tool for computing numbers, artificial intelligence tools are just that: tools for generating content. And just as a modern math class teaches students to work with calculators, we need to teach students how to use these tools so they can successfully navigate the world they’re now living in.

Read on for a look at why banning artificial intelligence tools won’t work, examples of why these tools are dumber than they appear, and an idea on how we can change student assignments to measure thinking in new ways.

Students will access artificial intelligence tools anyway

Almost every teacher has watched K-12 students creatively bypass firewalls to reach supposedly banned content on district networks. Whether it’s a games site or a video-watching site, they seem to find a way. So it’s safe to assume that as soon as district administrators blocked ChatGPT domains, students got to work finding tunnels through district firewalls to the very tool that’s now on the “no” list.

And even if network admins could successfully block all ChatGPT access, what about the new artificial intelligence tools that have launched since ChatGPT? What about sites that integrate content generation tools within their existing products? What about students accessing these tools when not on the district network or device?

The endless game of whack-a-mole is a battle that was lost before it started. Instead of trying to block tools like ChatGPT, we need to define rules for using them.

In the same way that norms and expectations set boundaries around now-commonplace technologies like calculators, it’s important to make clear to students how and when using (and disclosing) content generation tools will be considered acceptable and when it will be considered cheating.

The nonprofits Quill and CommonLit have released a toolkit to help educators navigate this set of discussions if you’re looking for ideas to get started.

AI content generation tools don’t know all the facts (right now)

There are many examples of ChatGPT demonstrating seemingly amazing depths of knowledge. A quick search turns up examples of the chatbot answering questions about college-level astrophysics and taking law school exams. And there are plenty of examples of content generation tools successfully crafting responses to the kinds of primary- and secondary-level essay prompts that might be assigned as homework.

These interactions may wrongly convince us that content generation tools are smarter than they really are.


Under the hood, large language model tools like ChatGPT work like a giant math problem built on statistical probabilities. The words that seem to appear magically on the page are there because, based on the vast body of internet text the model learned from, they are statistically likely to appear near each other. Hopefully, it’s no surprise to hear that some things on the internet are not true.
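To make “statistically likely” concrete, here is a toy sketch in Python. It is a bigram model, which only counts which word follows which. ChatGPT itself uses a large neural network trained on vastly more text, so treat this as an analogy rather than a description of how ChatGPT is built. The tiny corpus and all function names are hypothetical, made up for illustration.

```python
import random
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (hypothetical). It contains two true
# sentences and one false one; the model has no way to tell them apart.
CORPUS = (
    "the moon orbits the earth . "
    "the earth orbits the sun . "
    "the sun orbits the earth . "
)


def train_bigrams(text):
    """Count how often each word is immediately followed by each other word."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def next_word_probabilities(counts, word):
    """Convert the follow-counts for `word` into next-word probabilities."""
    followers = counts[word]
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}


def sentence_probability(counts, sentence):
    """Multiply the probability of each word given the word before it."""
    words = sentence.split()
    prob = 1.0
    for current, nxt in zip(words, words[1:]):
        prob *= next_word_probabilities(counts, current).get(nxt, 0.0)
    return prob


def generate(counts, start, length, seed=0):
    """Pick words one at a time, weighted by how often each followed the previous word."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length):
        probs = next_word_probabilities(counts, words[-1])
        if not probs:  # the previous word was never followed by anything
            break
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return " ".join(words)


model = train_bigrams(CORPUS)
print(next_word_probabilities(model, "the"))  # moon: ~0.17, earth: 0.5, sun: ~0.33
print(sentence_probability(model, "the sun orbits the earth"))  # false sentence, ~0.083
print(sentence_probability(model, "the earth orbits the sun"))  # true sentence, ~0.056
print(generate(model, "the", 8))  # fluent-looking, but may be false or nonsensical
```

Because “the” is followed by “earth” more often than by “sun” in this made-up corpus, the model scores the false sentence “the sun orbits the earth” (1/12) higher than the true “the earth orbits the sun” (1/18), even though each appears exactly once. The model ranks word sequences by frequency; nothing in it checks whether a sentence is true.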

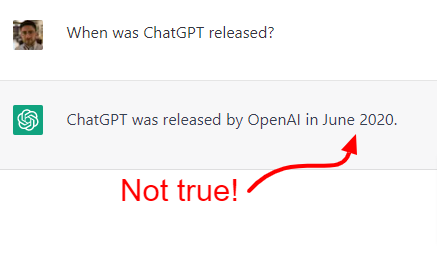

To put it plainly, tools like ChatGPT don’t (yet) understand the text they generate in response to user prompts. Ask ChatGPT a few questions beyond entertaining prompts like “write a haiku appropriate for explaining the water cycle” and you can quickly find examples of the AI writing compelling prose built on very bad facts. The tool doesn’t “know” when it’s wrong.

For example, when asked when it was released, ChatGPT said June 2020, even though the correct date was November 2022.

One reporter asked ChatGPT to write about the first woman president of the United States. Another reporter sat in on a high school class discussion of a novel and tried, without success, to have ChatGPT answer questions as part of the discussion. Even in a professional context, AI-generated content is proving unreliable. The online media outlet CNET used an AI tool to generate more than 75 articles, and even though humans edited them, the articles still contained inaccuracies.

It can be hard to reconcile the very impressive content that computers can generate with our expectations of true computer “intelligence” as we see it in science fiction movies. Yes, AI content generation tools clearly have the potential to help produce rich content very quickly. It is truly astounding that any internet user can try out this type of technology right now, for free.

But today’s reality is also that artificial intelligence tools are not yet able to replace the human who must be in charge of evaluating and understanding the ideas the computer expresses. Perhaps tools like Google’s Bard will pair text generation with better mechanisms for validating facts, but until then, as my college journalism professor Chuck Stone liked to say, “If your mother says she loves you, check it out.”

What’s needed is different types of assignments

So what about the impact on students and their essays? In the near term, some tools may be able to detect some AI-written content. In fact, OpenAI itself has published a tool that tries to “indicate” AI-generated text, and other tools, like DetectGPT and GPTZero, might also help. But these tools, like restricting access, are only stopgap measures.

To assess learning in a world where students can generate text on demand, teachers will need to adopt new assignments that measure student thinking in new ways. One very compelling proposal I’ve encountered is what Ben Thompson calls “zero trust homework,” described on his website Stratechery. He proposes a future where students are required to use a school-provided content generation tool as part of their assignments. The tool would work alongside students, and students would be required to edit and validate the content it produces.

Sometimes, for the benefit of students’ learning, the tool would purposely generate compellingly written but seriously flawed content for students to deconstruct and analyze. This approach aligns strongly with the idea of preparing students with 21st-century skills. Engaging with the AI engine’s arguments requires higher-order thinking, a skill that will be useful for succeeding in the world of today and tomorrow.

Students have been using AI-powered tools like Socratic and Grammarly to aid in their learning for some time, and now, with tools like ChatGPT by OpenAI and Wordtune by AI21 Labs, educators are accepting that the next generation of more powerful tools has arrived.

Maybe it’s helpful to think of tools like ChatGPT as autopilot for an airplane. Yes, the tool can guide the plane through many scenarios, but ultimately, the pilot needs to know how to fly the plane, too.

Content generation tools aren’t going to go away just because we don’t like them. Educators will need to assume students have access to these tools, and our role going forward will be to help students learn to think critically in a world where these tools exist.